Introduction to linear algebra#

KEYWORDS: numpy.transpose, numpy.eye, numpy.diag, numpy.tri, @, numpy.transpose, numpy.allclose, numpy.linalg.det, numpy.linalg.inv, numpy.linalg.matrix_rank, numpy.linalg.cond, numpy.linalg.solve

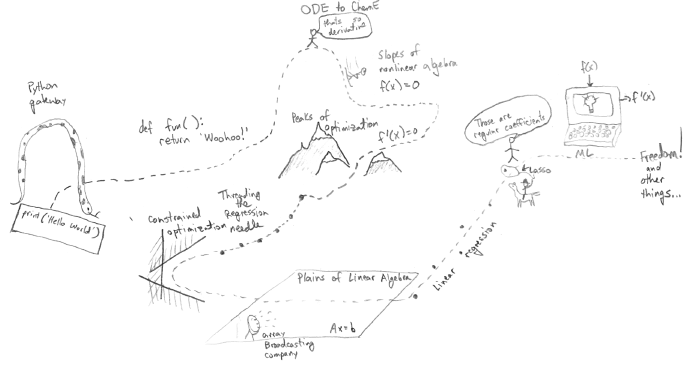

Just a reminder of where we are! We are now in the Plains of Linear Algebra.

Multidimensional arrays#

The foundation of linear algebra in Python is in multidimensional arrays.

import numpy as np

We make multidimensional arrays by using lists of lists of numbers. For example, here is a 2D array:

A = np.array([[1, 2], [3, 4]])

We can find out the shape of an array, i.e. the number of rows and columns from the shape attribute. It returns (rows, columns).

A.shape, A.size, len(A)

((2, 2), 4, 2)

for row in A:

print(row)

[1 2]

[3 4]

A[1]

array([3, 4])

Constructing arrays#

You can always make arrays by typing them in. There are many convenient ways to make special ones though. For example, you can make an array of all ones or zeros with these:

np.zeros(shape=[3, 3])

array([[0., 0., 0.],

[0., 0., 0.],

[0., 0., 0.]])

np.ones(shape=[3, 3])

array([[1., 1., 1.],

[1., 1., 1.],

[1., 1., 1.]])

You can make an identity matrix with:

np.eye(N=4)

array([[1., 0., 0., 0.],

[0., 1., 0., 0.],

[0., 0., 1., 0.],

[0., 0., 0., 1.]])

or a diagonal array:

I = np.eye(N=3, dtype=int)

I[1, 1] = 2

I[2, 2] = 3

I

array([[1, 0, 0],

[0, 2, 0],

[0, 0, 3]])

np.diag([1, 2, 3]) * 1.0

array([[1., 0., 0.],

[0., 2., 0.],

[0., 0., 3.]])

If you need a lower triangular array:

np.ones((3, 3)) - np.tri(3) + np.eye(3)

array([[1., 1., 1.],

[0., 1., 1.],

[0., 0., 1.]])

np.triu(np.ones(3)) # How to get an upper triangle array

array([[1., 1., 1.],

[0., 1., 1.],

[0., 0., 1.]])

Regular Algebra with arrays#

It takes some getting use to how to use arrays with algebra.

Addition and subtraction#

Let’s start with addition and subtraction. A good rule to remember that you can add and subtract arrays with the same shape.

A

array([[1, 2],

[3, 4]])

B = np.ones(A.shape)

A + B

array([[2., 3.],

[4., 5.]])

A - B

array([[0., 1.],

[2., 3.]])

This is an error though because the shapes do not match.

C = np.array([[0, 0, 1], [1, 0, 0]])

A + C

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

Cell In[16], line 3

1 C = np.array([[0, 0, 1], [1, 0, 0]])

----> 3 A + C

ValueError: operands could not be broadcast together with shapes (2,2) (2,3)

Note, however, that this is ok. This feature is called broadcasting. It works when the thing you are adding can be added to each row.

C * np.array([2, 2, 2])

array([[0, 0, 2],

[2, 0, 0]])

np.array([[[1], [1]]]).shape

(1, 2, 1)

Exercise Use some algebra to get an array that is ones above the main diagonal, and zeros everywhere else.

np.triu(np.ones(3), k=1)

array([[0., 1., 1.],

[0., 0., 1.],

[0., 0., 0.]])

np.ones((3, 3)) - np.tri(3)

array([[0., 1., 1.],

[0., 0., 1.],

[0., 0., 0.]])

Multiplication and division#

The default multiplication and division operators work element-wise.

A

array([[1, 2],

[3, 4]])

2 * A

array([[2, 4],

[6, 8]])

1 / A

array([[1. , 0.5 ],

[0.33333333, 0.25 ]])

A * B

array([[1., 2.],

[3., 4.]])

B / A

array([[1. , 0.5 ],

[0.33333333, 0.25 ]])

A % 2

array([[1, 0],

[1, 0]])

A**2

array([[ 1, 4],

[ 9, 16]])

np.sqrt(A)

array([[1. , 1.41421356],

[1.73205081, 2. ]])

Matrix algebra#

To do matrix multiplication you use the @ operator (This is new in Python 3.5), or the numpy.dot function. If you are not familiar with the idea of matrix multiplication you should review it at https://en.wikipedia.org/wiki/Matrix_multiplication.

We write matrix multiplication as: \(\mathbf{A} \mathbf{B}\). We cannot multiply any two arrays; their shapes must follow some rules. We can multiply any two arrays with these shapes:

(m, c) * (c, n) = (m, n)

In other words the number of columns in the first array must equal the number of rows in the second array. This means it is not generally true that \(\mathbf{A} \mathbf{B} = \mathbf{B} \mathbf{A}\).

A.shape, B.shape

((2, 2), (2, 2))

A

array([[1, 2],

[3, 4]])

B

array([[1., 1.],

[1., 1.]])

A @ B

array([[3., 3.],

[7., 7.]])

This is the older way to do matrix multiplication.

np.dot(A, B)

array([[3., 3.],

[7., 7.]])

These rules are true:

\((k \mathbf{A})\mathbf{B} = k(\mathbf{A} \mathbf{B}) = \mathbf{A}(k\mathbf{B})\)

\(\mathbf{A}(\mathbf{B}\mathbf{C}) = (\mathbf{A}\mathbf{B})\mathbf{C}\)

\((\mathbf{A} + \mathbf{B})\mathbf{C} = \mathbf{A}\mathbf{B} + \mathbf{A}\mathbf{C}\)

\(\mathbf{C}(\mathbf{A} + \mathbf{B}) = \mathbf{C}\mathbf{A} + \mathbf{C}\mathbf{B}\)

Exercise construct examples of each of these rules.

We can also multiply a matrix and vector. This is like the shapes of (m, r) * (r, 1) = (m, 1)

k = 2

(k * A) @ B == k * (A @ B)

array([[ True, True],

[ True, True]])

# Checking rule #4

C = np.random.random((2, 2))

C

np.allclose((C @ (A + B)), (C @ A + C @ B))

True

x = np.array([1, 2])

A @ x

array([ 5, 11])

There is a small subtle point, the x-array is 1-D:

x.shape

(2,)

Its shape is not (2, 1)! Numpy does the right thing here and figures out what you want. Not all languages allow this, however, and you have to be careful that everything has the right shape with them.

Linear algebra functions of arrays#

The transpose#

In the transpose operation you swap the rows and columns of an array. The transpose of A is denoted \(\mathbf{A}^T\).

A

array([[1, 2],

[3, 4]])

A.T

array([[1, 3],

[2, 4]])

There is also a function for transposing.

np.transpose(A)

array([[1, 3],

[2, 4]])

A matrix is called symmetric if it is equal to its transpose: \(\mathbf{A} == \mathbf{A}^T\).

Q = np.array([[1, 2], [2, 4]])

np.allclose(Q, Q.T)

True

A matrix is called skew symmetric if \(\mathbf{A}^T = -\mathbf{A}\).

Q = np.array([[0, 1], [-1, 0]])

np.allclose(Q.T, -Q)

True

A matrix is called orthogonal if this equation is true: \(\mathbf{A} \mathbf{A}^T = \mathbf{I}\). Here is an example of an orthogonal matrix:

theta = 120

Q = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])

with np.printoptions(suppress=True):

print(Q @ Q.T)

[[1. 0.]

[0. 1.]]

Here are the four rules for matrix multiplication and transposition

\((\mathbf{A}^T)^T = \mathbf{A}\)

\((\mathbf{A}+\mathbf{B})^T = \mathbf{A}^T+\mathbf{B}^T\)

\((\mathit{c}\mathbf{A})^T = \mathit{c}\mathbf{A}^T\)

\((\mathbf{AB})^T = \mathbf{B}^T\mathbf{A}^T\)

Exercise Come up with an example for each rule.

# rule #4

A = np.random.random((30, 30))

B = np.random.random((30, 30))

np.max(np.abs((A @ B).T - B.T @ A.T))

np.float64(0.0)

The determinant#

The determinant of a matrix is noted: det(A) or |A|. Many matrices are used to linearly transform vectors, and the determinant is related to the scaling magnitude.

np.linalg.det(A)

np.float64(-0.787371826189322)

The inverse#

A matrix is invertible if and only if the determinant of the matrix is non-zero.

The inverse is defined by: \(\mathbf{A} \mathbf{A}^{-1} = \mathbf{I}\).

We compute the inverse as:

A = np.random.random((3, 3))

np.linalg.inv(A)

array([[-0.68390497, -0.295605 , 1.51136264],

[-0.39361047, 1.65863329, -1.73907159],

[ 1.24767767, -0.27265231, 0.15859802]])

And here verify the definition.

with np.printoptions(suppress=True):

print(A @ np.linalg.inv(A))

[[ 1. -0. 0.]

[ 0. 1. 0.]

[ 0. -0. 1.]]

Another way to define an orthogonal matrix is \(\mathbf{A}^T = \mathbf{A}^{-1}\).

A A.T = I

A.inv A A.T = A.inv I = A.inv

I A.T = A.inv

A.T = A.inv

theta = 12

Q = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])

np.allclose(Q.T, np.linalg.inv(Q))

True

Rank#

The rank of a matrix is equal to the number of linearly independent rows in it. Rows are linearly independent if and only if they cannot be made by constants times another row or linear combinations of other rows.

np.linalg.matrix_rank(A)

np.int64(3)

Here is an example of a rank-deficient array. The last row is a linear combination of the first two rows.

A1 = [[1, 2, 3], [0, 2, 3], [2, 6, 9]]

np.linalg.matrix_rank(A1)

np.int64(2)

Here is an example of a rank-deficient array. It is deficient because the last row is just 0 times any other row.

A1 = [[1, 2, 3], [0, 2, 3], [0, 0, 0]]

np.linalg.matrix_rank(A1)

np.int64(2)

Note the determinant of this array is nearly zero as a result.

np.linalg.det(A1)

np.float64(0.0)

Also note the inverse may be undefined or have some enormous numbers in it. This is not a reliable inverse. It is never a good idea to have giant numbers and small numbers in the same calculations!

np.linalg.inv(A1)

---------------------------------------------------------------------------

LinAlgError Traceback (most recent call last)

Cell In[53], line 1

----> 1 np.linalg.inv(A1)

File /opt/hostedtoolcache/Python/3.11.14/x64/lib/python3.11/site-packages/numpy/linalg/_linalg.py:648, in inv(a)

645 signature = 'D->D' if isComplexType(t) else 'd->d'

646 with errstate(call=_raise_linalgerror_singular, invalid='call',

647 over='ignore', divide='ignore', under='ignore'):

--> 648 ainv = _umath_linalg.inv(a, signature=signature)

649 return wrap(ainv.astype(result_t, copy=False))

File /opt/hostedtoolcache/Python/3.11.14/x64/lib/python3.11/site-packages/numpy/linalg/_linalg.py:145, in _raise_linalgerror_singular(err, flag)

144 def _raise_linalgerror_singular(err, flag):

--> 145 raise LinAlgError("Singular matrix")

LinAlgError: Singular matrix

The condition number is a measure of the norm of an array times the inverse of the array. If it is very large, the array is said to be ill-conditioned.

np.linalg.cond(A1)

np.float64(inf)

What all of these mean is that we only have two independent rows in the array.

Solving linear algebraic equations#

One of the key reasons to develop the tools above is for solving linear equations. Let’s consider an example.

Given these equations, find [x1, x2, x3]

\(x_1 - x_2 + x_3 = 0\)

\(10 x_2 + 25 x_3 = 90\)

\(20 x_1 + 10 x_2 = 80\)

reference: Kreysig, Advanced Engineering Mathematics, 9th ed. Sec. 7.3

First, we express this in the form \(\mathbf{A} \mathbf{x} = \mathbf{b}\).

A = np.array([[1, -1, 1], [0, 10, 25], [20, 10, 0]])

b = np.array([0, 90, 80])

Now, if we left multiply by \(\mathbf{A}^{-1}\) then we get:

\(\mathbf{A}^{-1} \mathbf{A} \mathbf{x} = \mathbf{A}^{-1} \mathbf{b}\) which simplifies to:

\(\mathbf{x} = \mathbf{A}^{-1} \mathbf{b}\)

How do we know if there should be a solution? First we make the augmented matrix \(\mathbf{A} | \mathbf{b}\). Note for this we need \mathbf{b} as a column vector. Here is one way to make that happen. We make it a row in a 2D array, and transpose that to make it a column.

Awiggle = np.hstack([A, np.array([b]).T])

Awiggle

array([[ 1, -1, 1, 0],

[ 0, 10, 25, 90],

[20, 10, 0, 80]])

If the rank of \(\mathbf{A}\) and the rank of \(\mathbf{\tilde{A}}\) are the same, then we will have one unique solution. if the rank is less than the number of unknowns, there maybe an infinite number of solutions.

np.linalg.matrix_rank(A), np.linalg.matrix_rank(Awiggle)

(np.int64(3), np.int64(3))

If \(\mathbf{b}\) is not all zeros, we can also use the fact that a non-zero determinant leads to a unique solution.

np.linalg.det(A)

np.float64(-950.0000000000001)

It should also be evident that since we use an inverse matrix, it must exist (which is certain since the determinant is non-zero). Now we can evaluate our solution.

x = np.linalg.inv(A) @ b

x

array([2., 4., 2.])

Now you might see why we vastly prefer linear algebra to nonlinear algebra; there is no guessing or iteration, we just solve the equations!

Let us confirm our solution:

A @ x == b

array([False, True, False])

This fails because of float tolerances:

[float(z) for z in A @ x - b] # subtle point that sometimes small numbers print as zero

[4.440892098500626e-16, 0.0, 1.4210854715202004e-14]

We should instead see if they are all close. You could roll your own comparison, but we instead leverage numpy.allclose for this comparison.

np.allclose(A @ x, b)

True

The formula we used above to solve for \(\mathbf{x}\) is not commonly used. It turns out computing the inverse of a matrix is moderately expensive. For small systems it is negligible, but the time to compute the inverse grows as \(N^3\), and there are more efficient ways to solve these when the number of equations grows large.

import numpy as np

import time

t = []

I = np.array(range(2, 5001, 50))

for i in I:

m = np.eye(i)

t0 = time.time()

np.linalg.inv(m)

t += [time.time() - t0]

import matplotlib.pyplot as plt

plt.plot(I, t)

plt.xlabel("N")

plt.ylabel("Time to invert (s)");

As usual, there is a function we can use to solve this.

np.linalg.solve(A, b)

array([2., 4., 2.])

t = []

I = np.array(range(2, 5001, 500))

for i in I:

A = np.eye(i)

b = np.arange(i)

t0 = time.time()

np.linalg.solve(A, b)

t += [time.time() - t0]

plt.plot(I, t)

plt.xlabel("N")

plt.ylabel("Time to solve Ax=b (s)");

You can see by inspection that solve must not be using an inverse to solve these equations; if it did, it would take much longer to solve them. It is remarkable that we can solve ~5000 simultaneous equations here in about 1 second!

This may seem like a lot of equations, but it isn’t really. Problems of this size routinely come up in solving linear boundary value problems where you discretize the problem into a large number of linear equations that are solved.

Summary#

Today we introduced many functions used in linear algebra. One of the main applications of linear algebra is solving linear equations. These arise in many engineering applications like mass balances, reaction network analysis, etc. Because we can solve them directly (not iteratively with a guess like with non-linear algebra) it is highly desirable to formulate problems as linear ones where possible.

There are many more specialized routines at https://docs.scipy.org/doc/numpy-1.15.1/reference/routines.linalg.html.